Accelerate Artificial Intelligence at the Edge

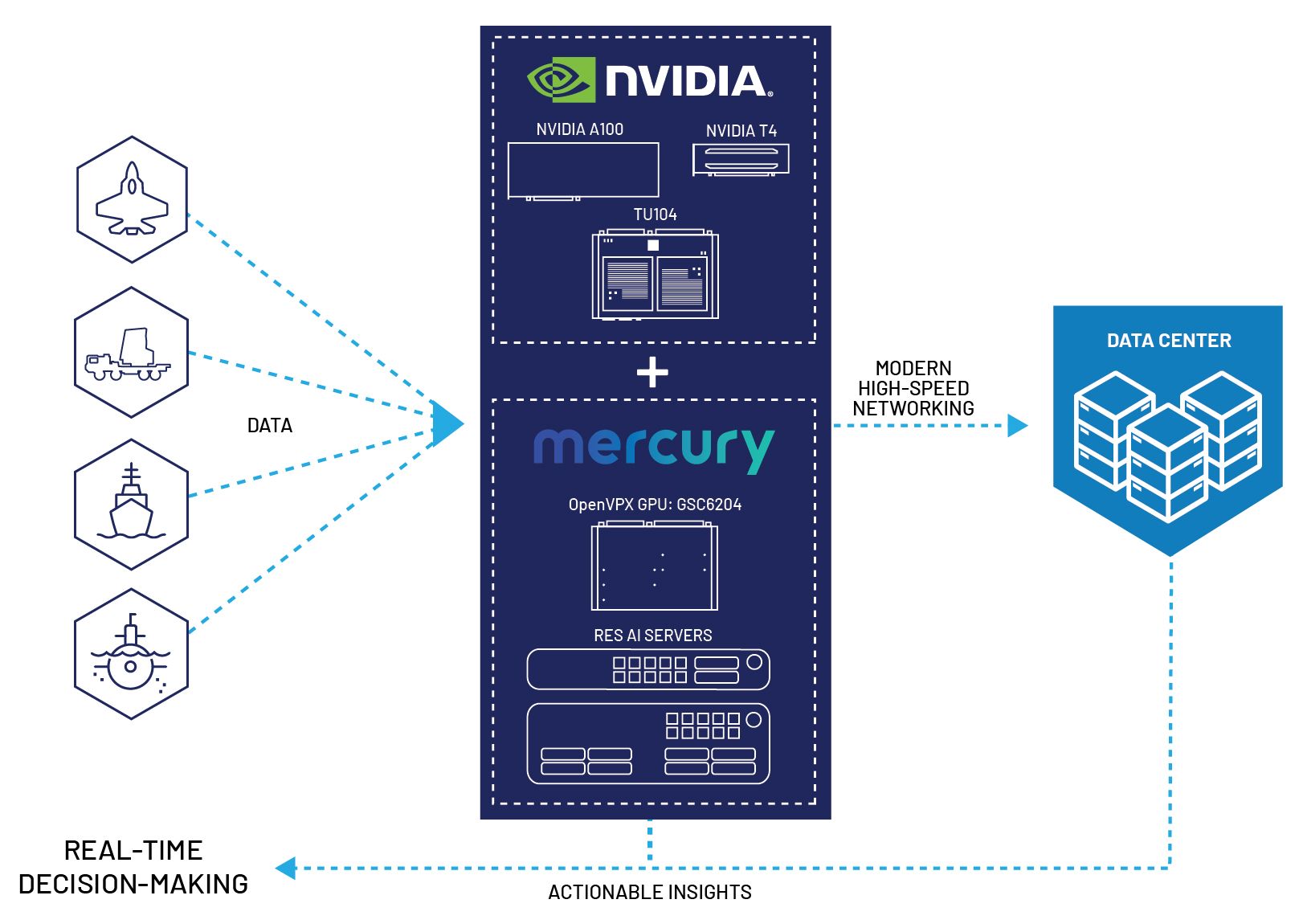

Mercury delivers the latest data center technologies to accelerate artificial intelligence (AI) applications at the edge. Powered by NVIDIA graphics processing units (GPUs), our processing subsystems enable critical real-time decision-making in the field, supporting mission success and scaling AI applications from the cloud to the edge.

Blog

Taking the Data Center to the Edge with High-Performance Embedded Edge Computing

Webinar (on demand)

AI at the Tactical Edge: Securing Machine Learning for Multi-Domain Operations

AI is the future. Learn how Mercury & NVIDIA are making it possible today.

CHALLENGE

Real-time decision-making at the edge

SOLUTION

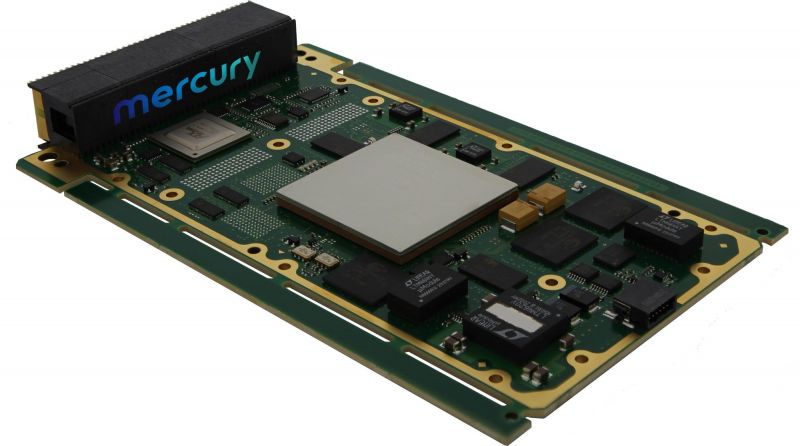

High-performance computing with RES AI servers and OpenVPX GSC6204 modules can process data in the field, at the very edge, or quickly transmit it to the cloud to obtain actionable insights.

Handle Massive AI Workloads with NVIDIA GPUs

With early access to new technologies, Mercury aligns product development with NVIDIA's roadmap to provide the most advanced GPU accelerators. We support NVIDIA software development kits, including Metropolis, which optimizes AI video analytics, and JetPack, DeepStream and TensorRT, which enhance video and camera inferencing.

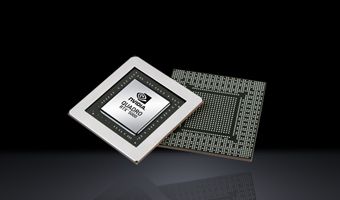

NVIDIA T4 GPU

The T4 GPU accelerator offers the performance of up to 100 CPUs in an energy-efficient, 70-watt PCIe form factor.

NVIDIA A100 GPU

The A100 is offered as the world's first combined GPU-SmartNIC accelerator. Data is received and analyzed at the same time, delivering up to 20x higher performance than the previous generation.

Embedded TU104 GPU

The TU104 incorporates NVIDIA's NVLink high-speed interconnect to deliver high-bandwidth, low-latency connectivity between pairs of GPUs, allowing each pair to share memory capacity and split the workload.

Respond to insights, signals and threats faster

Edge-ready GPU systems integrate the latest commercial technologies to keep pace with evolving AI algorithms, collecting and processing data in the field in real time and eliminating the need to transfer it to the cloud.

Improve cloud response times and simplify management

Supercomputing systems reduce latency by supporting the latest high-speed networks that adhere to open standards, simplifying integration and allowing you to build an agile IT infrastructure that extends from the cloud to the edge.

Mitigate cyber and supply-chain threats

Mercury's secure solutions are designed and manufactured in Defense Microelectronics Activity (DMEA)-accredited, IPC-1791 U.S. facilities with a traceable, managed supply chain, and can be customized with security features to safeguard critical data and IP.

Rapidly & cost-effectively launch, incorporate and sustain AI

A modular, open-system approach promotes rapid innovation with easily upgradable components that don't require replacing the entire system. Our global team is available to support your AI system and help sustain its uptime wherever you operate.